DeepSeek Coder: Let the Code Write Itself with AI-Powered Code Generation

In the ever-evolving world of software development, efficiency and precision are paramount. DeepSeek Coder, a family of open-source AI code models, is changing how developers write software by offering code generation, completion, and debugging assistance across many programming languages. This guide covers DeepSeek Coder's features, benchmark results, and integration options, explains why it matters for modern software development, and notes that an APK version is also available.

What is DeepSeek Coder?

DeepSeek Coder is a series of code language models trained from scratch on a dataset made up of 87% code and 13% natural language in both English and Chinese. Each model is pre-trained on 2 trillion tokens, and the series spans sizes from 1 billion to 33 billion parameters, so developers can pick a model that fits their hardware and quality needs.
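As a quick sanity check on the data mix, the split of the 2-trillion-token budget can be worked out directly. This sketch assumes the 87/13 ratio applies uniformly to the full pre-training budget of each model, which is how the figures above read; it is illustrative arithmetic, not a description of DeepSeek's actual data pipeline.

```python
# Pre-training budget and data mix as stated in this article.
# Assumption: the 87/13 split applies to the whole 2T-token budget.
TOTAL_TOKENS = 2_000_000_000_000  # 2 trillion tokens per model
CODE_PERCENT = 87                 # share of source code
NATURAL_LANGUAGE_PERCENT = 13     # share of English and Chinese text


def tokens_for(percent: int, total: int = TOTAL_TOKENS) -> int:
    """Return how many of `total` tokens correspond to `percent` percent."""
    # Integer arithmetic avoids floating-point rounding on large counts.
    return total * percent // 100


code_tokens = tokens_for(CODE_PERCENT)
text_tokens = tokens_for(NATURAL_LANGUAGE_PERCENT)
print(f"code: {code_tokens:,} tokens")  # code: 1,740,000,000,000 tokens
print(f"text: {text_tokens:,} tokens")  # text: 260,000,000,000 tokens
```

In other words, roughly 1.74 trillion tokens of code and 0.26 trillion tokens of natural language per model, under the stated assumption.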

Key Features of DeepSeek Coder

- Support for Over 80 Programming Languages: DeepSeek Coder can assist with code in more than 80 languages, so a single model can serve projects that mix several languages.

- Extended Context Window: With a context window of up to 16K tokens, DeepSeek Coder can take several related files into account at once, enabling project-level code completion and infilling (generating the missing code between an existing prefix and suffix). This is particularly useful in larger projects, where the right completion often depends on code defined elsewhere.

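- Open-Source Accessibility: DeepSeek Coder's code is released under the MIT License, and its model weights are released under a separate model license that permits commercial use. Developers can access, modify, and integrate the models into their projects within those license terms, which encourages experimentation and customization.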
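The infilling feature described above works by showing a base model the code before and after a gap and asking it to generate the middle. The sketch below only builds such a prompt. The three sentinel strings are taken from the examples in the DeepSeek Coder GitHub README (note the full-width bars and the "▁" character); they are an assumption to verify against the tokenizer's special tokens, and the `build_fim_prompt` helper is hypothetical, not part of any official API.

```python
# Fill-in-the-middle (FIM) sentinel tokens as shown in the DeepSeek Coder README.
# They use full-width vertical bars (U+FF5C) and "▁" (U+2581), not ASCII "|" or "_".
# Assumption: verify these against the tokenizer's special tokens before use.
FIM_BEGIN = "<｜fim▁begin｜>"
FIM_HOLE = "<｜fim▁hole｜>"
FIM_END = "<｜fim▁end｜>"


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Wrap the code before and after a gap so a base model can fill the gap.

    Hypothetical helper: the prompt contains only the prefix and suffix;
    the model is expected to generate the missing middle after FIM_END.
    """
    return f"{FIM_BEGIN}{prefix}{FIM_HOLE}{suffix}{FIM_END}"


prefix = "def is_even(n: int) -> bool:\n"
suffix = "\n\nprint(is_even(4))\n"
prompt = build_fim_prompt(prefix, suffix)
print(prompt)
```

The resulting string would be tokenized and passed to a base (not instruct) checkpoint's `generate` call; the newly generated tokens are the proposed body of `is_even`.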

Performance Benchmarks

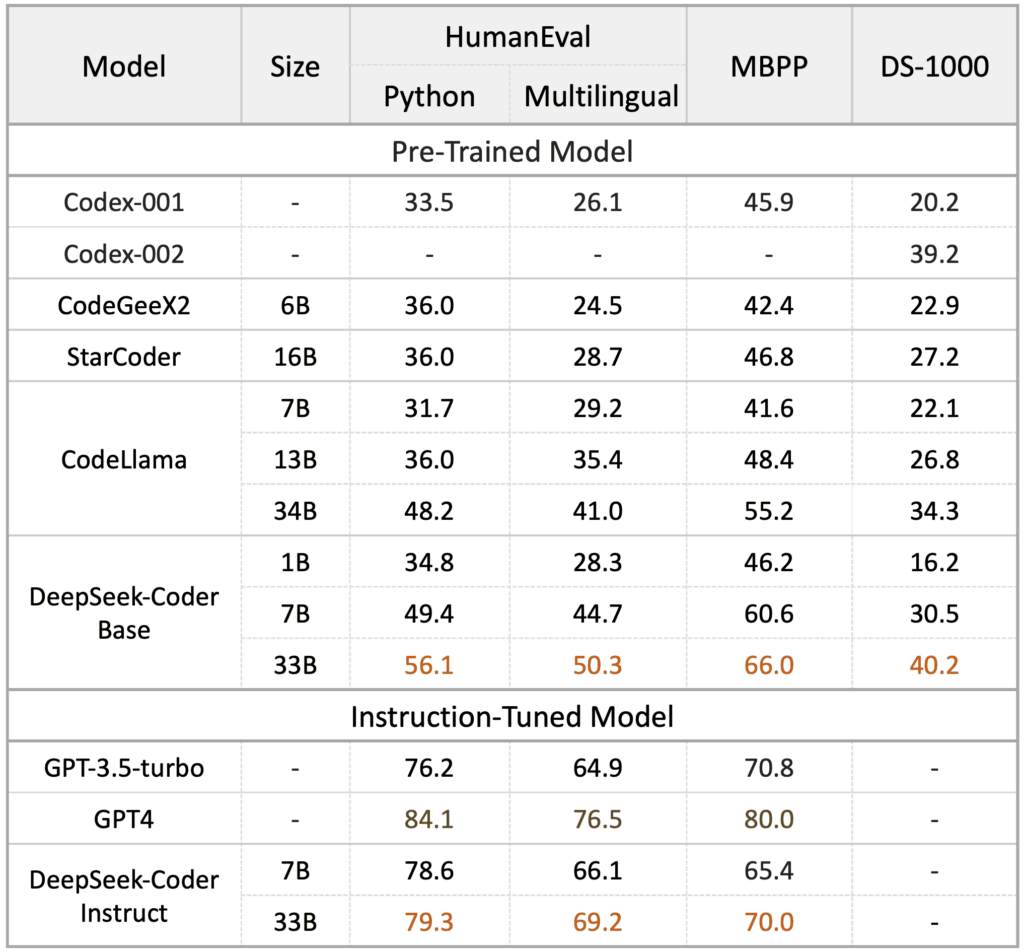

DeepSeek Coder has been evaluated on several coding benchmarks, with the following results:

- HumanEval Python: Outperforms existing open-source code language models by a significant margin.

- HumanEval Multilingual: Leads other open-source models on the multilingual version of HumanEval, which covers several programming languages.

- MBPP (Mostly Basic Python Problems): Performs strongly on this set of entry-level Python programming tasks.

- DS-1000: Achieves high accuracy on this benchmark of data-science code generation problems drawn from Python libraries such as NumPy and Pandas.
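HumanEval and MBPP scores are commonly reported as pass@k: the probability that at least one of k generated samples passes a problem's unit tests. The sketch below implements the unbiased estimator introduced alongside HumanEval, pass@k = 1 - C(n - c, k) / C(n, k), where n samples are drawn per problem and c of them pass. The article does not state which k or sampling settings DeepSeek used, so treat this as background on the metric rather than a reproduction of their evaluation; `pass_at_k` and `benchmark_score` are illustrative names.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: number of samples generated for the problem
    c: number of those samples that pass the unit tests
    k: number of attempts allowed
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # If fewer than k samples fail, every size-k draw contains a passing sample.
    if n - c < k:
        return 1.0
    # 1 minus the probability that a random size-k subset has no passing sample.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over (n, c) pairs, one pair per benchmark problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Three hypothetical problems, 10 samples each, with 3, 0, and 10 passing.
score = benchmark_score([(10, 3), (10, 0), (10, 10)], k=1)
print(f"pass@1 = {score:.3f}")
```

With a single greedy sample per problem (n = 1), pass@1 reduces to the fraction of problems solved, which is why it is a convenient headline number.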

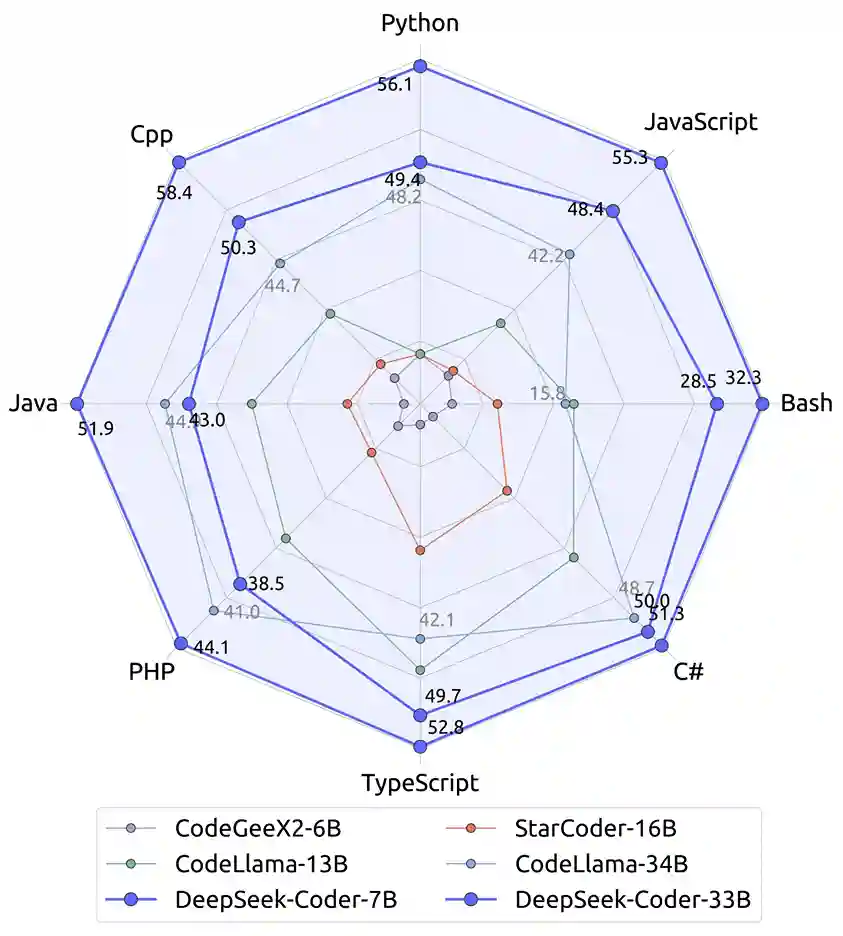

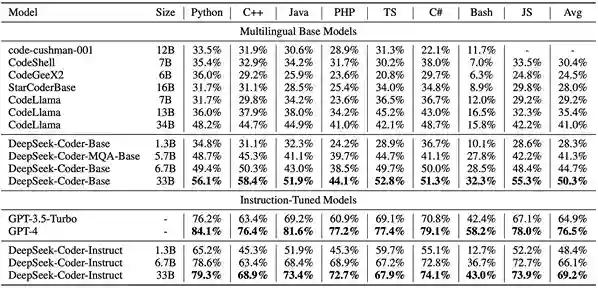

Performance of different Code LLMs on Multilingual HumanEval Benchmark

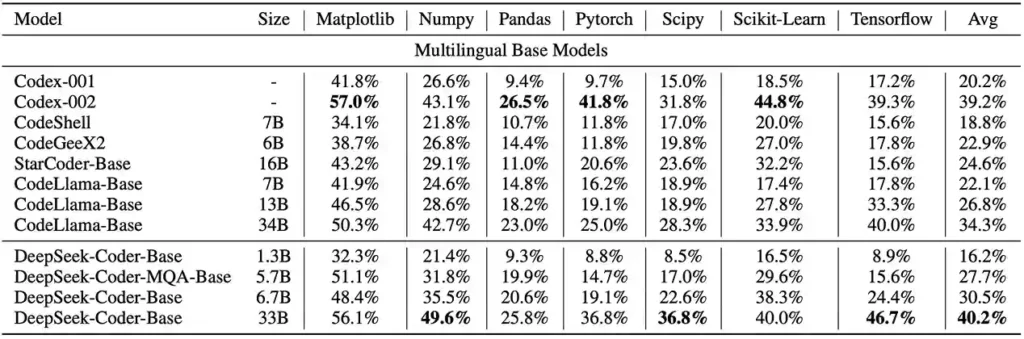

Performance of different Code LLMs on DS-1000 Benchmark

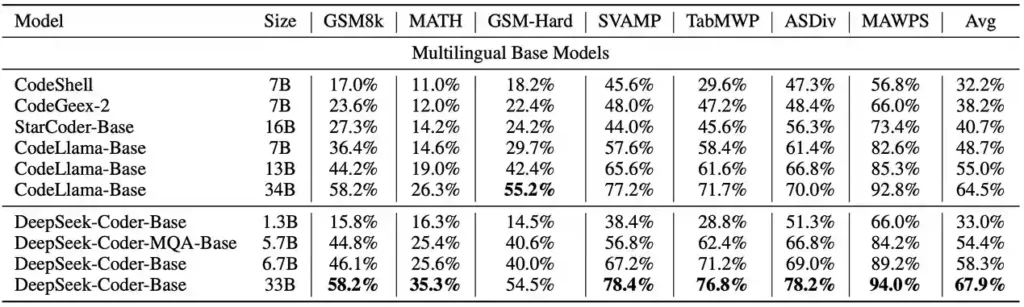

Performance of different Code Models on Math-Reasoning Tasks

Notably, the DeepSeek-Coder-Instruct-33B model, after instruction tuning, surpasses GPT-3.5-turbo on HumanEval and achieves results comparable to GPT-3.5-turbo on MBPP.

How to Access DeepSeek Coder

Developers can interact with DeepSeek Coder through various platforms:

- Chat Interface: Engage with the model via the official chat platform.

- GitHub Repository: Access the source code, documentation, and contribute to the project.

- Model Downloads: Obtain pre-trained model weights from Hugging Face for integration into custom applications.

Getting Started with DeepSeek Coder

To begin utilizing DeepSeek Coder in your development workflow:

- Visit the Official Website: Explore the features and capabilities of DeepSeek Coder.

- Access the Chat Platform: Interact with the model for code generation and assistance.

- Explore the GitHub Repository: Review the source code, access detailed documentation, and join the community of contributors.

- Download Models from Hugging Face: Integrate the pre-trained models into your projects for customized applications.
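For the Hugging Face route, a minimal sketch of loading an instruct checkpoint with the `transformers` library follows. It mirrors the pattern shown in the DeepSeek Coder GitHub README, but several details are assumptions: the model ID is one of the published checkpoints chosen for illustration, the helper names `build_messages` and `generate_code` are hypothetical, and it assumes a `transformers` 4.x release in which `apply_chat_template(..., return_tensors="pt")` returns a tensor. Heavy imports are deferred so the module loads without `torch` installed.

```python
# Assumption: one of the published instruct checkpoints, chosen for illustration.
MODEL_ID = "deepseek-ai/deepseek-coder-6.7b-instruct"


def build_messages(request: str) -> list[dict[str, str]]:
    """Wrap a single user request in the role/content chat format."""
    return [{"role": "user", "content": request}]


def generate_code(request: str, model_id: str = MODEL_ID,
                  max_new_tokens: int = 512) -> str:
    """Download the model on first use and answer one coding request.

    torch and transformers are imported lazily so this module can be
    imported without them installed.
    """
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, trust_remote_code=True, torch_dtype=torch.bfloat16
    )
    # Render the conversation with the model's own chat template.
    inputs = tokenizer.apply_chat_template(
        build_messages(request), add_generation_prompt=True, return_tensors="pt"
    ).to(model.device)
    # Greedy decoding; stop at the tokenizer's end-of-sequence token.
    outputs = model.generate(
        inputs,
        max_new_tokens=max_new_tokens,
        do_sample=False,
        eos_token_id=tokenizer.eos_token_id,
    )
    # Decode only the newly generated tokens, skipping the echoed prompt.
    return tokenizer.decode(outputs[0][inputs.shape[1]:], skip_special_tokens=True)
```

Calling `generate_code("Write a quick sort function in Python.")` would download the weights on first use and return the model's answer as text. As written, the model loads on the CPU, so a GPU (for example via `model.to("cuda")`) is advisable for models of this size.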

Conclusion

DeepSeek Coder represents a significant advancement in code language models, offering developers a powerful tool to enhance coding efficiency and accuracy. Its open-source nature, combined with state-of-the-art performance, makes it a valuable asset for the global developer community.

For more information, visit the official DeepSeek Coder website or access the resources available on GitHub and Hugging Face.